Unmanned aerial vehicles (UAVs), also known as drones, have already proved to be valuable tools for tackling a wide range of real-world problems, from the monitoring of natural environments and agricultural plots to search and rescue missions and the filming...

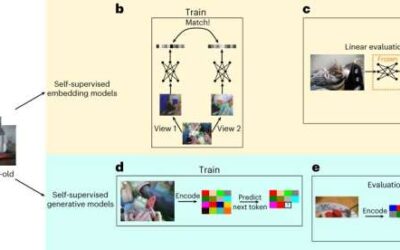

Training artificial neural networks to process images from a child’s perspective

Psychology studies have demonstrated that by the age of 4–5, young children have developed intricate visual models of the world around them. These internal visual models allow them to outperform advanced computer vision techniques on various object recognition tasks.

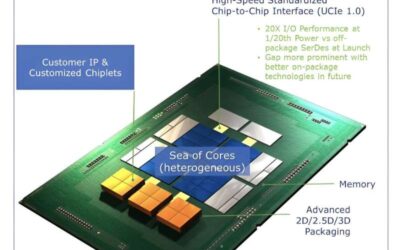

Intel introduces approach to boost power efficiency, reliability of packaged chiplet ecosystems

The integration of electronic chips in commercial devices has significantly evolved over the past decades, with engineers devising various integration strategies and solutions. Initially, computers contained a central processor or central processing unit (CPU),...

The AI bassist: Sony’s vision for a new paradigm in music production

Generative artificial intelligence (AI) tools are becoming increasingly advanced and are now used to produce various personalized content, including images, videos, logos, and audio recordings. Researchers at Sony Computer Science Laboratories (CSL) have recently been...

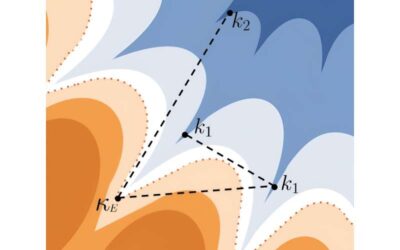

The solution space of the spherical negative perceptron model is star-shaped, researchers find

Recent numerical studies investigating neural networks have found that solutions typically found by modern machine learning algorithms lie in complex extended regions of the loss landscape. In these regions, zero-energy paths between pairs of distant solutions can be...

Can large language models detect sarcasm?

Large language models (LLMs) are advanced deep learning algorithms that can analyze prompts in various human languages, subsequently generating realistic and exhaustive answers. This promising class of natural language processing (NLP) models has become increasingly...

Testing the biological reasoning capabilities of large language models

Large language models (LLMs) are advanced deep learning algorithms that can process written or spoken prompts and generate text in response to these prompts. These models have recently become increasingly popular and are now helping many users to create summaries of...

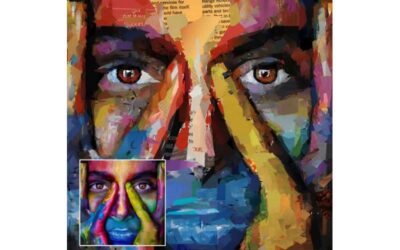

Creating artistic collages using reinforcement learning

Researchers at Seoul National University have recently tried to train an artificial intelligence (AI) agent to create collages (i.e., artworks created by sticking various pieces of material together), reproducing renowned artworks and other images. Their proposed...

An approach to plan the actions of robot teams in uncertain conditions

While most robots are initially tested in laboratory settings and other controlled environments, they are designed to be deployed in real-world environments, helping humans to tackle various problems. Navigating real-world environments entails dealing with high levels...

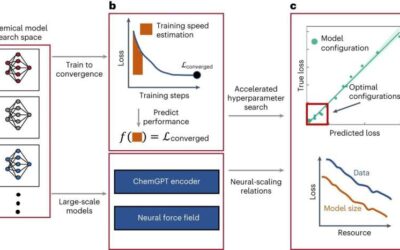

Study explores the scaling of deep learning models for chemistry research

Deep neural networks (DNNs) have proved to be highly promising tools for analyzing large amounts of data, which could speed up research in various scientific fields. For instance, over the past few years, some computer scientists have trained models based on these...